Toward a Superdeterministic Path-Integral Model of Quantum Mechanics

A few years ago, I heard this idea from quantum mechanics that subatomic particles behave “randomly.” That is, they can pop into existence, tunnel through walls, and just do incredible things that don’t happen in the macroscopic world. To wit, subatomic particles behave indeterminately, without cause. And so this set my mind thinking—can there really be such a thing as an uncaused event? I didn’t think so. I’ve always believed in determinism in the universe and that man himself has no Free Will.

But then later I found out about Bell’s Theorem, and how it “solved” the argument between quantum mechanics’ idea of entangled photons that communicate instantaneously (and faster than the speed of light), and Einstein’s idea of “hidden variables” that these entangled particles must carry with them. In short, when experiments based on Bell’s Theorem were conducted in later years, every experiment showed agreement with quantum mechanical theory. And so, if there was no real determinism governing the world, some people argued that this fact even allowed for the possibility of Free Will.

Curiously, one of the assumptions of the experiments based on Bell’s inequalities was exactly this idea of the Free Will of the experimenter. It was John Bell himself who stated that one of the loopholes in his theorem was superdeterminism in the universe. What is superdeterminism? Well, it’s just plain old regular determinism—but one which also applies to the experimenters themselves. In other words, it says the experimenters have no Free Will to choose the settings of their instruments. In short, the experimenters’ choices are embedded in the same causal chain as the “hidden variables” that the subatomic particles carry. And therefore, there could be no measurement independence.

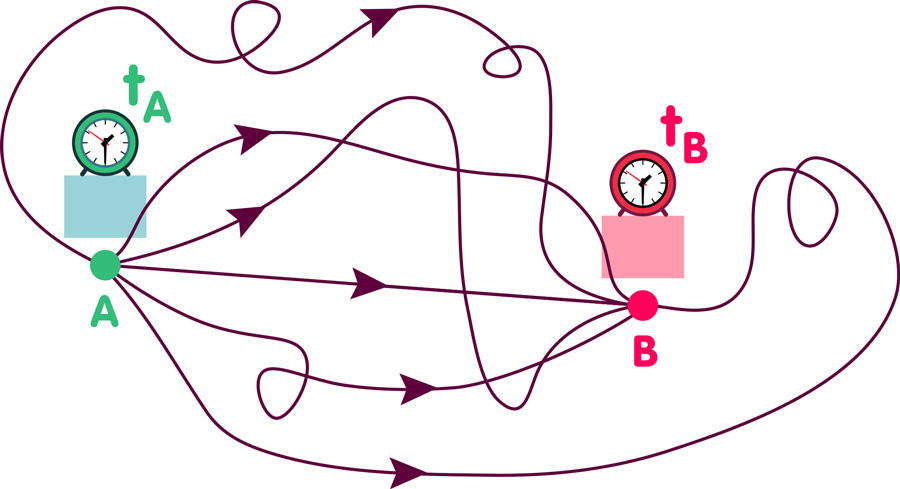

So just recently, I came upon this idea of Richard Feynman’s path integrals. Basically, Feynman says that when a photon or electron goes from point A to point B, it takes every conceivable path. And if you sum up all the amplitudes from these paths (some canceling each other out), you can calculate the probability of light going from point A to point B. I had read about Feynman’s path integrals before, but I had always thought this was just fancy math! I didn’t think it meant that literally every path is taken. But then I saw an experiment. A laser was reflecting off a mirror and bouncing to someone’s cell phone detector. And then they covered up the spot where the laser was bouncing off the mirror—and of course, the laser wasn’t seen. But when they placed some material next to where they had blocked the laser light—a diffraction grating sheet with a series of parallel lines—lo and behold, the laser light appeared again. Despite the fact that the center reflection point on the mirror was still covered, the light had reappeared—albeit in a different location.
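In symbols, the sum over paths described above is usually written as follows. This is the standard textbook form, added here only for reference; the symbols $K$, $S$, and $\hbar$ are not used elsewhere in this introduction.

$$
K(B, A) = \sum_{\text{paths } x(t):\, A \to B} e^{\, i S[x(t)] / \hbar},
\qquad
P(A \to B) \propto \left| K(B, A) \right|^{2}
$$

Here $S[x(t)]$ is the classical action of a given path and $\hbar$ is the reduced Planck constant. Every path contributes a phasor of the same magnitude, and two paths whose actions differ by about $\pi\hbar$ cancel one another.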

So light really does take all paths from point A to point B. It can go from the emitter to Jupiter and back again. The light can do loops and zigzags. It is not just a theoretical possibility; it is a real phenomenon. But why don’t we see all these crazy paths manifesting? It’s because these paths are canceled out by nearby paths: their phases, when they arrive at the final point, interfere destructively. But as this laser experiment suggests, what happens when an object in the environment, nearby or remote, blocks some of the paths that would otherwise interfere destructively, and so changes the final amplitude? Couldn’t the environment act like a sort of “diffraction grating” and change the summed result? And if the environment is constantly in flux, couldn’t such a phenomenon ultimately explain quantum “randomness”?
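The cancellation argument above can be checked with a small numerical sketch in the spirit of Feynman’s mirror example. It is a toy model, not the experiment described: the geometry, the wavelength, and the function names `path_length` and `amplitude` are assumptions chosen for illustration, and the “grating” here simply blocks the mirror strips whose phase would cancel their neighbors, rather than modeling a real sheet with evenly spaced lines.

```python
import numpy as np

# Illustrative geometry in arbitrary units (all values are assumptions).
SOURCE = (-1.0, 1.0)     # emitter position (x, y)
DETECTOR = (1.0, 1.0)    # detector position (x, y)
WAVELENGTH = 0.01        # much shorter than the distances involved


def path_length(x):
    """Length of the path source -> mirror point (x, 0) -> detector."""
    to_mirror = np.hypot(x - SOURCE[0], SOURCE[1])
    to_detector = np.hypot(DETECTOR[0] - x, DETECTOR[1])
    return to_mirror + to_detector


def amplitude(x_min, x_max, grating=False, samples=20_000):
    """Magnitude of the summed phasors for mirror points in [x_min, x_max].

    With grating=True, strips whose phasor has a negative real part are
    treated as blocked, so the surviving strips no longer cancel.
    """
    x = np.linspace(x_min, x_max, samples)
    dx = x[1] - x[0]
    phase = 2.0 * np.pi * path_length(x) / WAVELENGTH
    phasors = np.exp(1j * phase)
    if grating:
        phasors = np.where(np.cos(phase) > 0.0, phasors, 0.0)
    return abs(np.sum(phasors) * dx)


center = amplitude(-0.5, 0.5)                     # around the classical reflection point
edge = amplitude(1.5, 2.5)                        # far from it: neighboring paths cancel
edge_grating = amplitude(1.5, 2.5, grating=True)  # same strip, cancelling parts blocked

print(f"center of mirror:    {center:.4f}")
print(f"edge of mirror:      {edge:.4f}")
print(f"edge with 'grating': {edge_grating:.4f}")
```

In this sketch the edge of the mirror contributes almost nothing to the sum until the cancelling strips are blocked, after which its contribution is no longer negligible. That is the sense in which blocking some paths can make light appear where it otherwise would not.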

Here’s the most important thing to ponder—the photon can go to the end of the universe and back again and still contribute to the final amplitude that dictates the probability of the final path of light. Would such an extreme path be most likely canceled out? Yes, absolutely. But if the environment presented some boundaries for certain paths that would ordinarily destructively interfere with this wild path that traversed the universe and back, then this path could potentially make an extremely tiny contribution to the final probability.

So you see the conclusion here? If Feynman’s paths are real (and the laser experiment certainly seems to demonstrate that they are), then absolutely everything in the universe can contribute to the path that photons ultimately take. It means that absolutely everything is connected. The entire universe, in all its parts, is “entangled.” This is what superdeterminism is. It means that the entire universe is causally bound, and that every part of it contributes to these photon paths.

And so the reason for this apparent quantum indeterminacy is not “randomness” at all. It is simply that we cannot see or know all the environmental conditions that are impinging upon the photons.

So why do we see randomness at the quantum level and not in the everyday world where things are macroscopic?

To explain this, let’s take the example of Brownian motion that Einstein analyzed in his 1905 paper. Brownian motion is the observation, under the microscope, that small grains of pollen suspended in water move erratically in a jerky, zigzagging fashion. What Einstein said was that far tinier particles, the atoms and molecules of the water, were constantly bombarding these grains and pushing them to and fro. The grains are much bigger than the molecules, but they are still small enough that the impacts arriving from different sides do not balance at every instant, and so the grains get pushed about. However, if you placed an extremely large object in the water, each individual impact would be negligible compared with the momentum needed to move the object noticeably. And the millions of impacts arriving at the same time from all sides would very nearly cancel each other out. Simply stated, a large object would appear to rest steady in a sea of shaking atoms.
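The averaging argument above can be made concrete with a toy simulation. This is only a sketch under stated assumptions, not Einstein’s derivation: the function name `rms_velocity_jitter` and its parameters are hypothetical, the kicks act along a single axis with equal magnitude, the number of kicks per time step is assumed to scale with surface area, and the mass with volume.

```python
import numpy as np


def rms_velocity_jitter(radius, steps=5_000, kicks_per_area=100, seed=0):
    """RMS velocity change per time step for a sphere of the given radius.

    Toy assumptions: kicks act along one axis, each carries one unit of
    momentum to the left or right with equal probability, the number of
    kicks per step scales with surface area (radius**2), and the mass
    scales with volume (radius**3).
    """
    rng = np.random.default_rng(seed)
    n_kicks = int(kicks_per_area * radius**2)
    mass = radius**3
    # Kicks to the right follow a binomial distribution; the rest go left.
    right = rng.binomial(n_kicks, 0.5, size=steps)
    net_momentum = 2 * right - n_kicks
    return float(np.sqrt(np.mean((net_momentum / mass) ** 2)))


small = rms_velocity_jitter(radius=1.0)
large = rms_velocity_jitter(radius=10.0)

print(f"small grain jitter:  {small:.3f}")
print(f"large object jitter: {large:.3f}")
print(f"ratio:               {small / large:.1f}")
```

Under these assumptions the net push grows only as the square root of the number of kicks while the mass grows much faster, so the jitter falls off roughly as one over the radius squared. The large object is hit far more often, yet it moves far less.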

So in short, this is what is happening with subatomic particles. In the world of the very small, these particles are under influence from the environment that affects them greatly due to their small size. Physicists have believed in their fundamental randomness because they have not been able to see or understand the contribution from these surrounding forces.

But ultimately, it is Richard Feynman’s genius contribution that solves the problem. If all possible paths can contribute to a photon’s probability of going from point A to point B, then that means the entire universe can contribute to shaping that photon’s path. But if it’s a baseball going from point A to point B, you won’t see the effects because of a phenomenon called decoherence, which effectively averages out the quantum effects at the macroscopic scale. But in the world of the very small, these environmental perturbations are very observable—like the grains of pollen shaking in a watery medium.

So finally, I want to reflect on the so-called “conspiratorial” aspect of the universe when we invoke the theory of superdeterminism: the idea that the universe conspires to deceive us.

Basically, it has been said that if you deny measurement independence, then you must explain why the correlations between measurement settings and particle properties are so perfectly tuned as to make quantum theory look correct. Under superdeterminism, it feels like the universe is rigged to mislead us.

So why does it appear that the universe is rigging the results?

This is my best explanation.

When there is an interference pattern, the electron passes through the double-slit screen without decohering before it reaches the detector. Before decoherence, it doesn’t take a single, well-defined path. Instead, it behaves as if it traverses all possible paths, with none of them “rendered” as real trajectories. We know that only upon striking the detector does the electron take on real properties. But in the space where the electron travels before striking the detector, there is no information to resolve. Like a Graphics Processing Unit (GPU) in a computer that skips drawing unvisited terrain in a simulation, the universe simply doesn’t need to render it. We could say here that the universe is following the principle of least action. But even this idea doesn’t really express what’s actually happening.

The universe simply “knows” which action the experimenter will take. Not because the universe is conscious, but because the history is already written in the block universe. And so from our time-constrained perspective, it feels like nature is always “playing a trick” on us. But it’s not. Nature is being conservative.

Where we measure a quantum particle, it reveals its properties. Where we don’t measure, the properties don’t need to exist. The future already has a “plan” for where the particle will land. When there is no need for a definite path through a single slit, a “wave of probability” moves through both slits and produces an interference pattern. Is this wave real? In the 4D block universe, it would seem unnecessary. Still, from our time-bound existence, it is necessary to think of an electron or its proxy wave traveling towards the detector, even though from the perspective of the block universe it has already made its collision with the detector. So where does it land? Why can’t we predict where it will go? As I have said, the answer is contained in Feynman’s path integral formulation. The probability of where each individual photon or electron lands can be calculated from a summation over all possible paths, shaped by boundary conditions, and so it is true that the entire configuration of the universe, including its mass-energy distribution, contributes to the outcome. But in the 4D block universe, it would seem entirely wasteful and out of line with the principle of least action for a particle to explore every possible path when going from point A to point B. In truth, there would be just one path taken, with no exploration. In essence, we have to regard the path integral model as simply a mathematical shorthand for determining probabilities from the perspective of our time-bound existence. But Feynman’s principle captures the essence of what is true about the universe. Absolutely everything contributes to the photon’s ultimate path.

And so how do we come to a better intuitive understanding of this phenomenon? I have said that according to Feynman’s principle, all paths are taken, and this leads us to correct probabilities when we sum over paths. But then I have said that ultimately, in the block universe, there is only one path taken. Why exactly does a collection of possibilities collapse into a single event? We have to admit that from a 4D perspective it is likely that there is no collapse. But from our conscious perspective as we make our way through time, what is this “collapse” of the wave function we observe? Is it not just the future being revealed to us?

And so we come back again to the hardest thing to comprehend — why can we not exactly predict where a subatomic particle will land? I have said several times that everything contributes to the photon or electron’s path. But how exactly is this contribution from the universe made?

But now I beg your patience. I will try an idea on you that you may regard as pure metaphysics. But it may be the best idea that we have for explaining this conundrum. If we take an old idea from the philosopher Saint Thomas Aquinas, that there is a single chain of causality in the world, we understand by his reasoning that in order to get this entire chain set in motion, there needs to be a prime mover. In essence, there has to be one thing in the universe that sets the chain in motion. But even if we call this thing “God”, it solves nothing, because we can always ask: what is the thing that moved the prime mover? Can we really believe in an uncaused event? We might as well go back to the idea of quantum indeterminacy rather than accept such a scientific heresy. And so, what better idea is there than to accept that, somehow, the end of the universe’s chain of causality comes round to spawn the beginning of the chain? What if the universe were a perfect circle of causality?

If the universe were a perfect circle of causality, then we could see that for every event that takes place in this deterministic universe, absolutely everything before and after it would contribute to the properties of that event.

So then why can we not predict a subatomic particle’s exact position upon decoherence on a detector screen? It is again as I told you in my analogy of Brownian motion. There are far too many other particles contributing to its position and momentum. We have long been fooled into thinking that we can know the position and momentum of macroscopic objects, because the quantum effects have always “averaged out” and we couldn’t see the microscopic wiggles. But now that our science has allowed us to peer into this quantum world, suddenly we are amazed that everything is unpredictable and “random”. In short, the environment of the entire universe, both past and future, contributes to this “random” wobble of subatomic particles. And it is impossible to tease apart what forces contribute where.

And so the closest thing we have to the truth of this idea that everything contributes is Richard Feynman’s path integral formulation. The boundary conditions for the travel of any subatomic particle are the entire universe. The great majority of these extreme paths contribute nothing to the final amplitude due to destructive interference, and indeed the overwhelming majority of the final amplitude is derived from local influences. Still, there are infinitesimally small contributions being made to these final probabilities. And although these are only probabilities, and any given particle may land in a less probable place, we know that with frequent repetition over time they are upheld. Flip a coin 10 times and you may indeed get 10 heads. But repeat those 10 flips a million times, and the overall result will come much closer to the real probability, 50:50. In short, these wave amplitudes circumscribe something absolutely real. And so if there is even the tiniest, most minuscule contribution of a path that is extreme and very remote from the detector apparatus of some quantum experiment, what other conclusion should we reach than the idea that the entire mass and energy of the universe contributes to the final path of a subatomic particle?
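The coin-flip point above is easy to check numerically. The following is a minimal sketch assuming an ideal fair coin; the variable names and the seed are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

RUNS = 1_000_000   # number of separate 10-flip experiments
FLIPS = 10         # flips per experiment

# Number of heads in each 10-flip run of a fair coin.
heads_per_run = rng.binomial(FLIPS, 0.5, size=RUNS)

# Fraction of runs in which every flip came up heads.
all_heads_fraction = np.mean(heads_per_run == FLIPS)
# Fraction of heads over every flip in every run.
overall_heads_fraction = heads_per_run.sum() / (RUNS * FLIPS)

print(f"runs with 10 heads out of 10: {all_heads_fraction:.6f}")
print(f"expected for a fair coin:     {0.5 ** FLIPS:.6f}")
print(f"heads over all flips:         {overall_heads_fraction:.4f}")
```

A single run of 10 flips can land anywhere, including on 10 heads, but the fraction of heads across all runs settles very close to one half, and even the rare all-heads runs occur at close to their expected rate of 1 in 1024.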

Lastly, what can we do from a practical standpoint to try and verify that such extreme and remote paths contribute to the flight of a photon?

What we can do is probe how seemingly minor, non-decohering changes in the environment — distant masses, temperature gradients, wall geometries — might shift the interference pattern. These subtle boundary changes might alter which paths constructively interfere, gently guiding the particle’s most probable trajectory. That is the kind of experiment a superdeterministic model invites: not to overturn quantum predictions, but to detect how global constraints influence them.

The following paper develops a formal framework for this idea.