The New York Times vs AI

Recently you may be aware the New York Times has filed a lawsuit against OpenAI due to its accusation that the Large Language Models (LLMs) trained on NYT content infringe upon their copyrighted material. So I ask you, if indeed the neural networks that generate these LLMs truly function like the human brain and detect patterns in data (ie images, text, sound, etc), and then can reassemble these generalized patterns into coherent essays — just like human beings — does the NYT really have a case? If indeed this mechanism of abstraction the AI uses is identical to human perception, then don’t they have to prevent every human being from also viewing their copyrighted material to protect their rights, to prevent copyright infringement?

Now the foregoing question might seem silly — certainly we know that the AIis a machine algorithm, and that people are living, breathing entities with free will. A human being will know when it is engaged in plagiarism, a machine will not. The NYT would argue that because the algorithms are merely “machines” and “software”, all that they can do is copy data and then spit out variations of this data. But is this really what the AI is doing?

I don’t believe so.

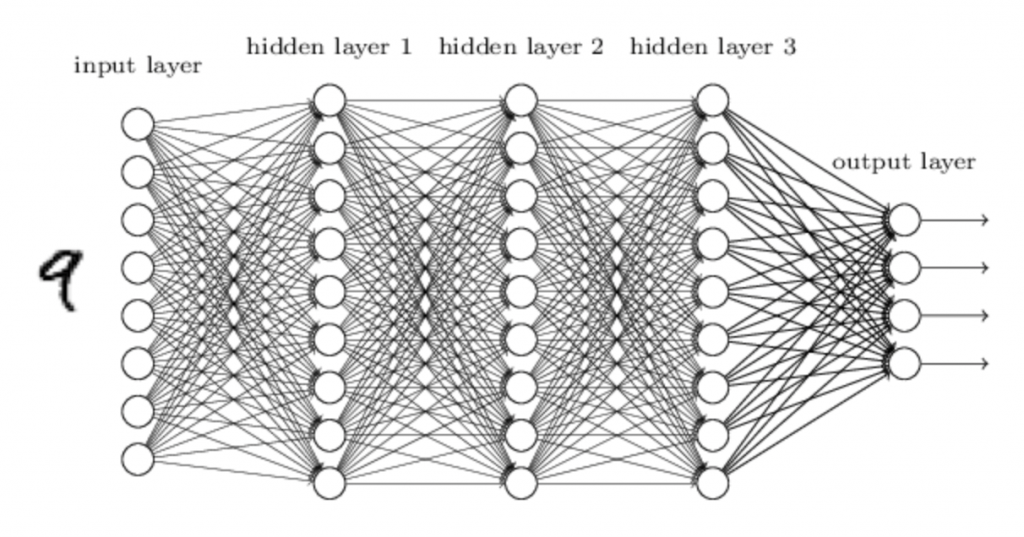

In short, what the neural network does is detect patterns. Like the human brain, the network, or more aptly, the perceptrons, have many layers that abstract out details in the data that it is processing. So if, for example, a character recognition network is fed a hand written image containing the letter ‘a’, there will be several layers to detect increasingly abstract features in that image. The first layer, or input layer will detect the individual pixels, the next layer might detect lines (ie, pixels aligned on some axis), a third layer might be a “corner” detector — i.e., where 2 lines meet — another layer might detect curves, and a final layer perhaps will detect the existence of a straight line and a curved line. Now, this is a gross simplification for the sake of argument here, but the important thing to understand about these perceptrons is that the layers in the network abstract out increasingly more general patterns in the data set. In short, the perceptron goes from the specific, the pixels, to the general, something like “the image contains a vertical straight line and a curved line that intersect”.

So finally, once a network is trained on this data, it will contain “weights” that basically represent the patterns or features the network has abstracted. When this network is then used to generate a character on its own, it will use a seed image, perhaps a few random pixels, and then it will start to fill in additional pixels based on the weights that exist in these network layers. In short, the network will create an image based on features that it has abstracted from the data. In no way does the network spit out verbatim any data set that it has received during its training.

If you have used Dall-E, Midjourney, Stable Diffusion or any other of these text-to-image generators that are currently popular, it is probably patently obvious to you that the creations are unique, sometimes bizarre, and sometimes highly imaginative. If one were to make a prompt to Dall-E for a “spider, wolf, octopus creature”, despite the fact that no creature like this exists, the model is able to generate an image that mixes features from all three of the above living things in sometimes very surprising ways. It can do this because the image generation is working at a higher abstraction level — i.e. using features. In short, the model knows what a foot is, and that is has a relationship with being near the ground. It knows that a thing called a “creature” is probably an animal and has a head, body and appendages. The gist of the argument here is, the model has never had to see the spider-wolf-octopus to make one.

So my main point is this — perceptrons were devised by scientists who were modeling neural pathways seen in the brain. In short, these networks work the same as the human brain. So if we say that we cannot allow these LLMs to generate content if they have been trained on NYT articles, then you pretty much have to conclude that no human being who has read a New York Times article should be allowed to write a news article themselves.

OK, so the cynic will say, look, no human being has read every single article the NYT has produced since 1851. And you would be correct. That’s not common. But let’s say I, personally, have read just about every book that Stephen King has ever written — should I then be prevented myself from writing a novel of horror? Will I have not absorbed Mr. King’s style, grammatical approach and vocabulary?

Sam Altman has indicated that, in reality, OpenAI doesn’t need to train on NYT content. And why would this be? It’s because there are plenty of other English language samples that will provide enough features of the English language to synthesize articles. And if the NYT content were left out of the training material, if someone were to then to make a prompt like “write an article in the style of the New York Times”, the only shortcoming of the model would be that it might not be able to produce the literary style of that publication. But certainly the model would not fail to make an interesting and detailed article in the English language.

Ok, so I know other skeptics might say I’m missing the point here. One might say, the problem is not with the LLMs per se, but rather with the persons creating the prompts. Can a “prompter” be guilty of copyright infringement by guiding the model to infringe with specific words? Let me give you a concrete example from the text-to-image universe. Recently I made an Apple iPhone game where I needed an icon image of a “mars rover”. Because I have loved all of the Pixar animated movies, I created a prompt like so:

“mars rovers climbing steep, rocky hill, in the style of Pixar”

What I quickly noticed was, for many generated images, the AI model spit out a mars rover that had cutes eyes with a binocular-like frame. Where did this come from? Well, no doubt, the AI model had been trained on content that used images from the movie Wall-E. Certainly it was statistically likely to use Wall-E, because the animated character was itself a type of rover with wheels. And of course both deal with the theme of technology and outer space.

So to get back to the question of the responsibility of those persons writing prompts — could I have made my mars rover look even more like wall-E by carefully crafting my prompts? Well, in a word, yes. In fact, I rejected many “rovers” that came out looking far too wall-E-like to avoid just such a scenario.

Again, we agree that the machine has no free will, but in some non-philosophical sense, human beings do have free will and can make the choice to actively plagiarize. To wit, one could carefully write prompts until one gets material that looks close to infringing copyright.

And now you might ask me, after I just argued that the network models go from the specific to the general, are you now reversing yourself by saying that indeed plagiarism is possible by using these models? Let me clarify with an example.

If you take an image model that has been trained only on countless images of Disney’s Donald Duck — say from various movies and cartoons — if you then ask the model to make you a “duck swimming on a pond”, you cannot in the least expect it to produce a natural, brown mallard duck with a green head. However, if the model were trained on real living wild ducks as well as cartoons and drawings and animations, then your prompt might very well work as you expect. However, on such an image model that were trained on all things, if indeed you asked for a “duck swimming on a pond, in the style of Disney”, the likelihood of receiving back Donald Duck is statistically probable. If you ask for a “cartoon duck standing upright in a cute little sailor suit, in the style of Disney”, then it’s overwhelmingly likely you will get a version — albeit, a never been seen version — of the Disney character Donald Duck. Why? It is because the words in the prompt dictate the features of the drawing. The prompts are telling the image model to return something very specific. Will the image model spit out an identical copy of Donald Duck from some cartoon or still frame of a movie? Absolutely not. But it would look close enough that if you decided to print up t-shirts with that image and sell them on Ebay, someone is likely coming after you.

So the gist of the argument is, the LLMs are not inherently copyright infringing. However, using very detailed and highly specific prompts, one could craft an essay or an image that would easily qualify as infringement. But this is the exact same circumstance as humans. Humans are not inherently copyright infringing, but they can remember exact instances of things they have seen and reproduce them. So the LLMs are not guilty by default.

In some ways the argument is much like the argument conservatives use when they say “guns don’t kill people, people kill people”. Obviously this is true. But still one might say that if there were no guns around, then there’s no chance someone gets killed by a gun.

I would say, in their lawsuit, the New York Times doesn’t want any guns around.

But let me tell you something. AI is coming. There is no stopping it.